This blog was last updated on 8 September 2025

This blog is presented by Twin Science, a global education technology company empowering educators through AI-enhanced learning solutions.

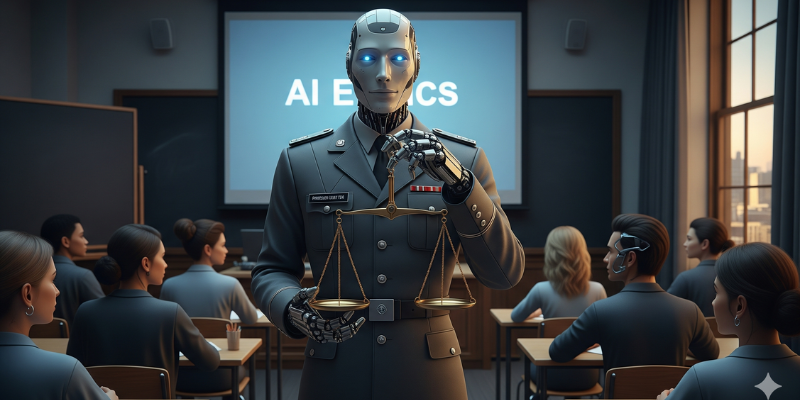

Why Are Ethical Dilemmas Emerging in AI Use for Education?

As AI becomes a regular presence in classrooms, it raises questions as well as hopes. Teachers ask: Is it fair for an algorithm to guide student progress? Who ensures privacy? What happens if bias creeps in?

These aren’t just technical issues; they’re ethical dilemmas. And for educators already balancing tight schedules, the uncertainty around AI use can feel overwhelming.

This is why exploring AI in education requires more than knowing how tools work; it demands understanding the values behind them. With classroom-ready AI tools designed for equity, safety, and pedagogy, you don’t have to navigate this gray space alone.

What Are the Main Ethical Challenges in AI Use?

Educators often face three gray areas when adopting AI tools for teaching:

1- Bias & Fairness: Does the AI treat all students equally, regardless of background or ability?

2- Data Privacy: How is sensitive student information protected? Who has access?

3- Transparency: Can teachers and students understand how AI makes its decisions?

These concerns aren’t abstract; they affect daily teaching. For instance, an AI system that recommends math exercises may unintentionally assign harder tasks to some groups of students while giving fewer opportunities to others. Without oversight, such tools risk reinforcing inequities.
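For readers who want to see what such oversight could look like in practice, here is a minimal sketch of a fairness spot-check. It assumes you can export a log of the exercises an AI tool recommended, along with a difficulty rating and a student group label. The data, the group names, the function names, and the 1.0 threshold are all hypothetical and chosen only for illustration; they do not describe any particular product.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical export of AI recommendations: (student group, exercise difficulty 1-5).
recommendations = [
    ("group_a", 4), ("group_a", 5), ("group_a", 4),
    ("group_b", 2), ("group_b", 3), ("group_b", 2),
]


def mean_difficulty_by_group(records):
    """Return the average recommended difficulty for each student group."""
    by_group = defaultdict(list)
    for group, difficulty in records:
        by_group[group].append(difficulty)
    return {group: mean(values) for group, values in by_group.items()}


def flag_gap(averages, threshold=1.0):
    """Return True when the spread between group averages exceeds the threshold.

    The threshold is an illustrative assumption, not an established standard.
    """
    return max(averages.values()) - min(averages.values()) > threshold


averages = mean_difficulty_by_group(recommendations)
needs_review = flag_gap(averages)
print(averages, "needs teacher review:", needs_review)
```

A flagged gap is not proof of bias, since groups may genuinely be at different stages. It is a prompt for the teacher to look more closely at why the tool is making those recommendations.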

How Can Teachers Navigate These Ethical Dilemmas?

The key is not rejecting AI, but using it wisely. Ethical AI use in education often means:

- Human-in-the-loop: Keeping teacher judgment central, using AI insights as guidance, not replacement.

- Clear boundaries: Setting transparent rules on what data is collected and how it’s used.

- Inclusive design: Choosing tools that adapt to diverse learning needs and reflect a wide range of student experiences.

Research suggests that AI has the potential to foster inclusion when thoughtfully applied. That means educators play a vital role: asking questions, monitoring outcomes, and ensuring that technology supports the learning journey rather than dictating it.

How Does This Align With Twin’s Learning Vision?

At Twin Science, we believe education isn’t just about building skills; it’s about building conscience. Our vision of a double-winged generation emphasizes not only competence in STEM but also a sense of social responsibility.

AI should reflect this balance. A student who uses an AI-powered simulation to explore renewable energy isn’t just learning science; they’re also developing empathy for the planet. Ethical use of AI means helping children grow into innovators who create with both skill and conscience.

Where Do We Go From Here?

The ethical gray areas of AI in education won’t vanish overnight. But they don’t need to paralyze progress either. By asking tough questions, setting boundaries, and choosing responsible edtech learning solutions, you can make AI a trusted ally.

It’s time to move beyond “Is AI safe?” and toward: “How can AI help us raise learners who are skilled, fair, and socially conscious?”